I keep getting asked “Is 4K worth it?” Here’s my response at this time (March 2015).

4K, also known as Ultra High Definition (UHD), is the latest “mainstream” technology for TV, movies, cameras, and even video games. People keep asking me if they should buy a UHD TV or monitor. Let’s dive into the topic.

What is 4K/UHD?

So what are 4K and UHD exactly? 4K originally referred to the number of pixels going horizontally across a screen, which was confusing since HD (720p and 1080p) referred to the number of pixels going vertically. The original resolution was 4096 x 2160, though it varies by format. UHD is the 16×9 version that’s been slightly chopped down horizontally, with its resolution set at 3840 x 2160.
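To put those numbers in perspective, here is a quick sketch that compares total pixel counts. The 4096 x 2160 and 3840 x 2160 figures come from the paragraph above; the 1280 x 720 and 1920 x 1080 figures for 720p and 1080p are the usual 16×9 values, which I’m adding for comparison. The function names are just ones I made up for this example.

```python
# Resolutions as (width, height) in pixels.
# 720p and 1080p use the usual 16x9 values; the other two come from the text.
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "UHD (2160p)": (3840, 2160),
    "4K (4096 wide)": (4096, 2160),
}


def pixel_count(width, height):
    """Total number of pixels on the screen."""
    return width * height


def aspect_ratio(width, height):
    """Width divided by height (about 1.78 for a 16x9 screen)."""
    return width / height


if __name__ == "__main__":
    base = pixel_count(*RESOLUTIONS["1080p"])
    for name, (w, h) in RESOLUTIONS.items():
        total = pixel_count(w, h)
        print(f"{name:>15}: {w} x {h} = {total:,} pixels "
              f"({total / base:.2f}x 1080p, aspect {aspect_ratio(w, h):.2f})")
```

The takeaway is that UHD doubles 1080p in both directions, so the screen has four times as many pixels to fill.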

A quick warning: These days you will see 4K, UHD, Ultra High Definition, or 2160p. They’re basically all the same unless you’re looking at very high-end cameras for making movies.

Is It Worth It?

Is 4K worth it at this time? As of March 2015, roughly a year and a half after the introduction of UHD, I’d say it’s still not worth it. However, that could change in the future, just like how 720p and 1080p TVs took a while to catch on. Still, I think it will take longer for some forms of media.

UHD TVs and monitors are steadily dropping in price, and the technology behind them continues to improve. That’s all a great sign for a shift to UHD. When 4K first hit the market, the TVs and monitors had outrageous prices, and the refresh rate was terrible. There wasn’t even an HDMI standard to support 4K at 60 Hz until the recent introduction of HDMI 2.0. Before that, HDMI topped out at 4K at 30 Hz, which is half the refresh rate of a standard HDTV and even some SDTVs.
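If you’re wondering why older HDMI was stuck at 30 Hz, a rough back-of-the-envelope calculation shows it. This sketch assumes 24 bits per pixel and usable video data rates of about 8.16 Gbit/s for HDMI 1.4 and 14.4 Gbit/s for HDMI 2.0. Those figures are not from this post, and the math ignores blanking intervals and audio, so treat it as an estimate rather than a spec check.

```python
BITS_PER_PIXEL = 24  # assumption: 8 bits each for red, green, and blue

# Assumption: approximate usable video data rates in Gbit/s
# (link rate minus encoding overhead). Not taken from the post.
HDMI_DATA_RATE_GBPS = {
    "HDMI 1.4": 8.16,
    "HDMI 2.0": 14.4,
}


def raw_video_gbps(width, height, refresh_hz, bits_per_pixel=BITS_PER_PIXEL):
    """Uncompressed video data rate in Gbit/s, ignoring blanking and audio."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def fits(link, width, height, refresh_hz):
    """True if the raw video rate is within the link's assumed data rate."""
    return raw_video_gbps(width, height, refresh_hz) <= HDMI_DATA_RATE_GBPS[link]


if __name__ == "__main__":
    for hz in (30, 60):
        rate = raw_video_gbps(3840, 2160, hz)
        for link in HDMI_DATA_RATE_GBPS:
            verdict = "fits" if fits(link, 3840, 2160, hz) else "does not fit"
            print(f"UHD at {hz} Hz needs about {rate:.2f} Gbit/s: {verdict} in {link}")
```

Under those assumptions, UHD at 60 Hz needs roughly twice the data of UHD at 30 Hz, which is more than the older standard can carry.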

Cameras

When it comes to cameras, you can always take pictures or record at 4K, then downsample to 1080p to keep the image quality high. YouTube also supports a 2160p option. This is more of an editor’s choice, though.

TV Shows and Movies

When it comes to TV shows and movies, there aren’t that many options for 4K. There are very few Blu-ray players out there that can upscale to 4K, and it’s still just an upscale. The small pool of “mastered in 4K” Blu-rays come from 4K masters, but the discs themselves still hold 1080p video that gets upscaled to 4K. Basically, it’s not a native 4K movie, so the quality isn’t as good as it should be.

You can watch some videos at 4K on YouTube, but you will need a strong internet connection to load those videos. Most 2160p YouTube videos are GoPro videos and vlogs, but you may be able to find internet-based shows that are shot at 4K.

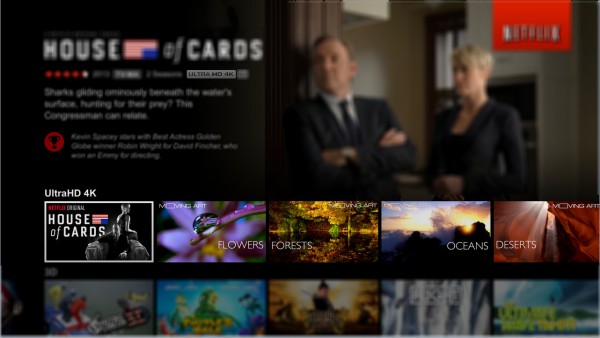

Netflix is also an option for streaming 4K TV shows and movies, but there aren’t too many titles available at this time. Furthermore, you will need to jump through a few hoops to stream 4K. You can read an FAQ from Netflix here. You will need a better plan from Netflix, a specific TV and streaming device that supports 4K video (if your computer is not hooked directly up to your TV), and at least 25 Mbps download speed for your internet, which is uncommonly high in the USA though may be common in other countries.
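That 25 Mbps figure also matters if your internet plan has a data cap. Here is a small sketch of the arithmetic. It assumes the stream actually uses the full 25 Mbps the whole time, which is a worst case since the requirement is a connection speed and not necessarily the stream’s bitrate. The 250 GB monthly cap in the example is a hypothetical number I picked for illustration.

```python
def gigabytes_per_hour(megabits_per_second):
    """Data used in one hour at a constant bitrate, in gigabytes (1 GB = 1000 MB)."""
    megabits = megabits_per_second * 3600  # seconds in an hour
    megabytes = megabits / 8               # 8 bits per byte
    return megabytes / 1000


def hours_within_cap(cap_gigabytes, megabits_per_second):
    """How many hours of streaming fit inside a data cap at a constant bitrate."""
    return cap_gigabytes / gigabytes_per_hour(megabits_per_second)


if __name__ == "__main__":
    rate = 25            # Mbps, the download speed mentioned above
    hypothetical_cap = 250  # GB per month, made up for this example
    print(f"{rate} Mbps for one hour is about {gigabytes_per_hour(rate):.2f} GB")
    print(f"A {hypothetical_cap} GB cap lasts about "
          f"{hours_within_cap(hypothetical_cap, rate):.1f} hours at that rate")
```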

Video Games

Video games are a completely different beast when it comes to 4K. Playing movies is one thing, but rendering a high quality video game at such a high resolution is another.

To play video games at 4K, you will need a PC with a high-end processor, graphics card, and a power supply big enough to use those without blowing up. Obviously, you will need the other essential parts like a case, RAM, motherboard, and hard drive.

Next, you’ll need to find a game that actually supports 4K resolutions. Not all video games support the 3840 x 2160 resolution. Luckily, some developers have updated their older games to support UHD. Once the resolution is set, you will have to tweak the other settings to match your hardware’s performance. Some video games really push the power of a PC to its limits. They may have terrific lighting options, high resolution textures, or there may be a lot of things active on the screen at once. Depending on the game and your hardware, you may need to scale down a few options. A single Nvidia GeForce GTX 980 (a recent $500 graphics card) can run some games at 4K and 30 frames per second if a few options are set below ultra. Benchmarks here.

If you have a 4K TV, you do not need to buy a separate 4K monitor for your computer. You can simply plug your computer (using your graphics card’s output) directly into your TV. However, if the TV only has HDMI inputs, and your graphics card doesn’t have a newer HDMI 2.0 port, you will only get a max of 4K at 30 Hz. DisplayPort, on the other hand, supports 4K at 60 Hz, but DisplayPort is mostly found on monitors, not TVs.

You may have noticed that I have not mentioned consoles (Xbox, PlayStation, Nintendo) running games at 4K yet. That is because consoles rely on very cheap hardware to run video games. Nobody wants to buy a console for $1000, which is probably the price it would be if they sold one at a loss around now. Remember, you’re not just buying the hardware, you’re buying the development costs and the software that runs the console. PC gamers were able to make budget PCs that were about the same price as the Xbox One when it first released, but the PCs were just a tad bit weaker. The main reason was that Windows alone costs $100.

Do I Have to Make the Switch?

You can never be sure where the future will take us. Honestly, I hope 4K doesn’t become a real standard. I don’t think a higher pixel count is worth it unless you have a TV/monitor over 50 inches, or unless you’re about a foot away from your screen. I think companies should focus more on screen quality like better colors, contrast, and refresh rates.
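Here is a rough way to check the screen size and distance argument yourself. The sketch works out how many pixels fit into one degree of your vision for a 16×9 screen at a given size and distance. It assumes that around 60 pixels per degree is the point where a person with 20/20 vision stops seeing individual pixels. That threshold is a common rule of thumb and not a hard limit, and the function names are my own.

```python
import math

# Assumption: rule-of-thumb limit for 20/20 vision (one arcminute per pixel).
ACUITY_THRESHOLD_PPD = 60


def screen_width_inches(diagonal_inches, aspect_w=16, aspect_h=9):
    """Width of a screen from its diagonal, assuming a 16x9 shape by default."""
    return diagonal_inches * aspect_w / math.hypot(aspect_w, aspect_h)


def pixels_per_degree(horizontal_pixels, diagonal_inches, distance_inches):
    """How many pixels fall within one degree of vision at the given distance."""
    pixel_pitch = screen_width_inches(diagonal_inches) / horizontal_pixels
    degrees_per_pixel = math.degrees(2 * math.atan(pixel_pitch / (2 * distance_inches)))
    return 1 / degrees_per_pixel


def could_benefit_from_uhd(diagonal_inches, distance_inches):
    """True if 1080p is below the assumed threshold, so extra pixels may be visible."""
    return pixels_per_degree(1920, diagonal_inches, distance_inches) < ACUITY_THRESHOLD_PPD


if __name__ == "__main__":
    for distance in (12, 72, 96):  # inches: 1 foot, 6 feet, 8 feet
        hd = pixels_per_degree(1920, 50, distance)
        uhd = pixels_per_degree(3840, 50, distance)
        print(f"50 inch screen at {distance} in: 1080p {hd:.1f} ppd, UHD {uhd:.1f} ppd, "
              f"UHD could help: {could_benefit_from_uhd(50, distance)}")
```

Under that assumption, a 50 inch 1080p screen viewed from about 8 feet is already past the threshold, while the same screen from a foot away is nowhere near it.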

The switch from standard definition to high definition was huge in the US (and some other countries) mainly because of the switch from interlaced to progressive scan technology. Interlaced makes any resolution visually worse than its progressive scan counterpart. That’s why 1080p looks better than 1080i. Of course, 480 lines wasn’t a large amount anyway. Jumping up to 1080 was a nice leap, but continuing to go further to 2160 seems unnecessary for the most part.